前言

最近一直在忙(2月份沉迷steam,3月开始工作各种忙),好久没更新博客了,不过也积累了一些,忙里偷闲记录一下。

这个需求是这样的,我之前做了个工单系统,现在要对登录、注册、发起工单这些功能做限流,不能让用户请求太频繁。

从 .Net7 开始,已经有内置的限流功能了,但目前我们的项目还在使用 .Net6 LTS 版本,下一个 LTS 没发布之前,暂时不考虑使用 .Net7 这种非 LTS 版本。

然后我找到了这个 AspNetCoreRateLimit 组件,在 Github 上有接近三千个星星,看了一下文档使用也简单灵活,于是决定尝试一下~

AspNetCoreRateLimit 组件

项目主页: https://github.com/stefanprodan/AspNetCoreRateLimit

这是官方的介绍:

AspNetCoreRateLimit is an ASP.NET Core rate limiting solution designed to control the rate of requests that clients can make to a Web API or MVC app based on IP address or client ID.

The AspNetCoreRateLimit NuGet package contains an IpRateLimitMiddleware and a ClientRateLimitMiddleware, with each middleware you can set multiple limits for different scenarios like allowing an IP or Client to make a maximum number of calls in a time interval like per second, 15 minutes, etc. You can define these limits to address all requests made to an API or you can scope the limits to each API URL or HTTP verb and path.

用最近很厉害的 ChatGPT 翻译一下:

AspNetCoreRateLimit是一个ASP.NET Core速率限制解决方案,旨在基于IP地址或客户端ID控制客户端对Web API或MVC应用程序发出请求的速率。

AspNetCoreRateLimit NuGet包 包含一个IpRateLimitMiddleware和一个ClientRateLimitMiddleware,每个中间件都可以为不同的场景设置多个限制,比如允许IP或客户端在时间间隔内进行最大数量的调用,比如每秒、15分钟等。您可以定义这些限制以处理对API发出的所有请求,也可以将限制范围限定为每个API URL或HTTP动词和路径。

这个组件使用起来挺灵活的,直接在 AspNetCore配置 里定义规则,意味着可以不重新编译程序就修改限流规则,官方给的例子是直接在 appsettings.json 里配置,但使用其他配置源理论上也没问题(配置中心用起来)。

简单介绍下这个组件的思路

首先它有两种模式:

- 根据IP地址限流

- 根据 ClientID 限流

IP地址很容易理解,ClientID 我一开始以为是用户ID,不过看了说明,是一个放在请求头里的参数,比如 X-ClientId,这个要自己实现,可以直接用用户ID。

为了方便使用,我这个项目里面直接用IP地址模式。

RateLimit 组件可以配置全局的限流,也可以配置对某个IP地址(段)进行限流。

配置服务

为了从 appsettings.json 读取数据,先在 Program.cs 注册配置服务

builder.Services.AddOptions();

然后写个扩展方法来注册 RateLimit 的相关服务

引入命名空间

using AspNetCoreRateLimit;

using AspNetCoreRateLimit.Redis;

using StackExchange.Redis;

写个静态类

public static class ConfigureRateLimit {

public static void AddRateLimit(this IServiceCollection services, IConfiguration conf) {

//load general configuration from appsettings.json

services.Configure<IpRateLimitOptions>(conf.GetSection("IpRateLimiting"));

var redisOptions = ConfigurationOptions.Parse(conf.GetConnectionString("Redis"));

services.AddSingleton<IConnectionMultiplexer>(provider => ConnectionMultiplexer.Connect(redisOptions));

services.AddRedisRateLimiting();

services.AddSingleton<IRateLimitConfiguration, RateLimitConfiguration>();

}

public static IApplicationBuilder UseRateLimit(this IApplicationBuilder app) {

app.UseIpRateLimiting();

return app;

}

}

来解析一下配置的代码。

我暂时不需要对不同的IP地址段应用不同的限流规则

所以直接用 IpRateLimitOptions

services.Configure<IpRateLimitOptions>(conf.GetSection("IpRateLimiting"));

要做根据IP限流,就得记录每个IP访问了多少次,RateLimit 组件支持多种存储方式,最简单的可以直接存内存里,不过为了稳定我还是选择 Redis。

这几行代码就是配置 Redis 的。

var redisOptions = ConfigurationOptions.Parse(conf.GetConnectionString("Redis"));

services.AddSingleton<IConnectionMultiplexer>(provider => ConnectionMultiplexer.Connect(redisOptions));

services.AddRedisRateLimiting();

最后注入一下 IRateLimitConfiguration,我猜应该是中间件要用到的。至少我目前在 Controller 代码里不需要用到任何跟 RateLimit 有关的代码。

写完了扩展方法,回到 Program.cs

注册服务

builder.Services.AddRateLimit(builder.Configuration);

添加中间件

var app = builder.Build();

app.UseExceptionless();

app.UseStaticFiles(new StaticFileOptions {

ServeUnknownFileTypes = true

});

app.UseRateLimit();

// ...

app.Run();

我这里把 UseRateLimit 放在 UseStaticFiles 后面,不然页面里的静态文件都被算进去访问次数,很快就被限流了。

配置

在 appsettings.json 里写具体的限流规则。

官网提供的配置规则不能照抄,要理解一下他的文档

EnableEndpointRateLimiting- 这个选项要设置为 true ,不然设置的限流是全局的,不能根据某个路径单独设置限流StackBlockedRequests- 按照默认的设置为 false 就行,设置成 true 的话,一个接口被限流之后再重复请求还会计算到访问次数里面,这样有可能导致限流到天荒地老。

其他的配置顾名思义,懂的都懂。

GeneralRules 是对具体路径的限流规则

如果全局限流,把 EnableEndpointRateLimiting 设置为 false 的话,那就这样设置,1分钟只能访问5次

{

"Endpoint": "*",

"Period": "1m",

"Limit": 5

}

Endpoint 可以设置 HTTP方法:路径 的形式,比如 post:/account/login 具体看文档吧(参考文档第三条)

附上我的配置文件,对添加工单、登录、注册接口进行限流。

{

"IpRateLimiting": {

"EnableEndpointRateLimiting": true,

"StackBlockedRequests": false,

"RealIpHeader": "X-Real-IP",

"ClientIdHeader": "X-ClientId",

"HttpStatusCode": 429,

"IpWhitelist": [],

"EndpointWhitelist": [

"get:/api/license",

"*:/api/status"

],

"ClientWhitelist": [

"dev-id-1",

"dev-id-2"

],

"GeneralRules": [

{

"Endpoint": "*:/ticket/add",

"Period": "1m",

"Limit": 5

},

{

"Endpoint": "post:/account/login",

"Period": "1m",

"Limit": 5

},

{

"Endpoint": "post:/account/SignUp",

"Period": "1m",

"Limit": 5

}

],

"QuotaExceededResponse": {

"Content": "{{ \"message\": \"先别急,你访问得太快了!\", \"details\": \"已经触发限流。限流规则: 每 {1} 只能访问 {0} 次。请 {2} 秒后再重试。\" }}",

"ContentType": "application/json",

"StatusCode": 429

}

}

}

同时自定义了被限流时的提示。

效果如下

{

"message": "先别急,你访问得太快了!",

"details": "已经触发限流。限流规则: 每 1m 只能访问 5 次。请 16 秒后再重试。"

}

扩展

.Net7 内置的限流组件代码: https://github.com/cristipufu/aspnetcore-redis-rate-limiting

这个项目里介绍了几种限流策略,还配了手绘插图,形象生动,有兴趣的同学可以看一下,很有意思。

限流策略

虽然本文不是使用 .Net7 的限流中间件,但这几个限流策略也可以看一下,感觉有参考价值。

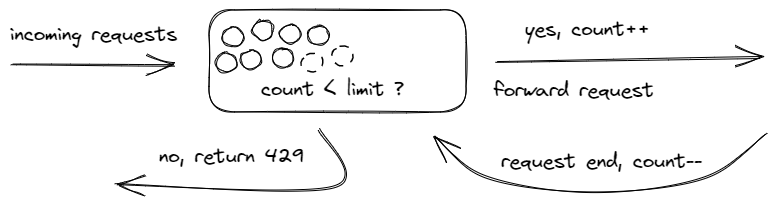

Concurrent Requests Rate Limiting

Concurrency Rate Limiter limits how many concurrent requests can access a resource. If your limit is 10, then 10 requests can access a resource at once and the 11th request will not be allowed. Once the first request completes, the number of allowed requests increases to 1, when the second request completes, the number increases to 2, etc.

Instead of "You can use our API 1000 times per second", this rate limiting strategy says "You can only have 20 API requests in progress at the same time".

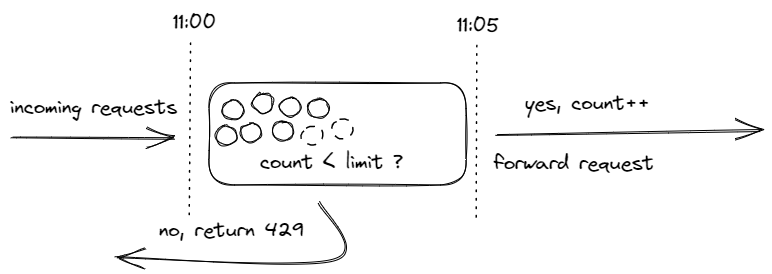

Fixed Window Rate Limiting

The Fixed Window algorithm uses the concept of a window. The window is the amount of time that our limit is applied before we move on to the next window. In the Fixed Window strategy, moving to the next window means resetting the limit back to its starting point.

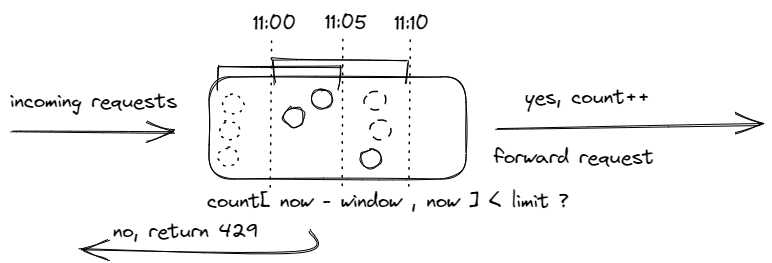

Sliding Window Rate Limiting

Unlike the Fixed Window Rate Limiter, which groups the requests into a bucket based on a very definitive time window, the Sliding Window Rate Limiter, restricts requests relative to the current request's timestamp. For example, if you have a 10 req/minute rate limiter, on a fixed window, you could encounter a case where the rate-limiter allows 20 requests during a one minute interval. This can happen if the first 10 requests are on the left side of the current window, and the next 10 requests are on the right side of the window, both having enough space in their respective buckets to be allowed through. If you send those same 20 requests through a Sliding Window Rate Limiter, if they are all sent during a one minute window, only 10 will make it through.

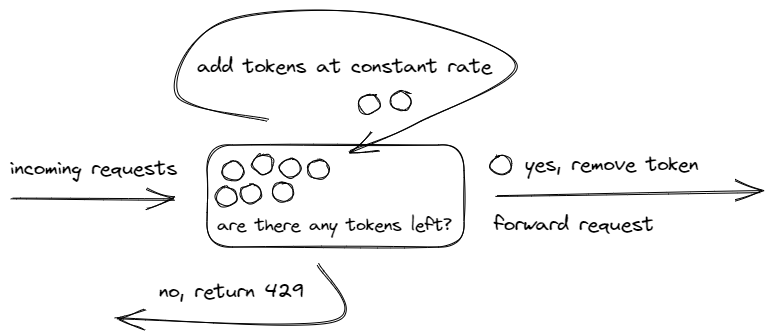

Token Bucket Rate Limiting

Token Bucket is an algorithm that derives its name from describing how it works. Imagine there is a bucket filled to the brim with tokens. When a request comes in, it takes a token and keeps it forever. After some consistent period of time, someone adds a pre-determined number of tokens back to the bucket, never adding more than the bucket can hold. If the bucket is empty, when a request comes in, the request is denied access to the resource.

程序设计实验室

微信公众号

程序设计实验室

微信公众号